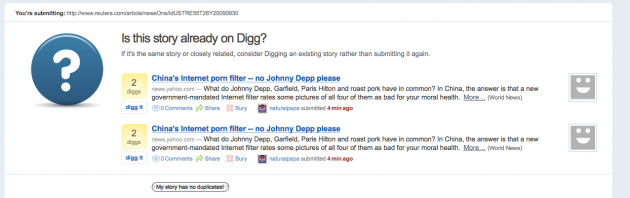

Since its inception, one of the biggest problems with Digg has been that users often submit the same content over and over again. This makes it harder for cool content to become popular because some users digg one submitted story, while others digg another. Today, Digg is releasing “several major updates” to its duplicate (known as a “dupe”) detection system.

The solution sounds fairly intensive. “To better understand the nature of the problem, we analyzed the types of duplicate stories being submitted. Most common are the same stories from the same site, but with different URLs. Our R&D team came up with a solution that identifies these types of duplicates by using a document similarity algorithm,” Digg’s Director of Product Chris Howard writes in a blog post. He adds that a more technical follow-up post will explain how this actually works, but says the system has proven reliable so far.
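Digg hasn’t said which document similarity algorithm it uses (that is what the promised follow-up post is for), so the sketch below is purely illustrative. It scores two pages by the cosine similarity of their word counts, a standard textbook technique that is not necessarily Digg’s. The `DUPE_THRESHOLD` value and all function names are hypothetical.

```python
import math
import re
from collections import Counter


def term_counts(text: str) -> Counter:
    """Lowercase the text and count each alphanumeric word."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: str, b: str) -> float:
    """Cosine similarity of two documents' word-count vectors (0.0 to 1.0)."""
    ca, cb = term_counts(a), term_counts(b)
    # Dot product only needs the words both documents share.
    dot = sum(ca[w] * cb[w] for w in ca.keys() & cb.keys())
    norm = math.sqrt(sum(v * v for v in ca.values())) * math.sqrt(
        sum(v * v for v in cb.values())
    )
    return dot / norm if norm else 0.0


# Hypothetical cutoff: at or above this score, flag the pages as the same story.
DUPE_THRESHOLD = 0.9


def is_probable_dupe(new_page_text: str, existing_page_text: str) -> bool:
    """Hypothetical helper: compare a new submission's body text to a stored one."""
    return cosine_similarity(new_page_text, existing_page_text) >= DUPE_THRESHOLD
```

Two URLs for the same story on the same site (say, a regular page and its print version) would usually produce nearly identical body text and therefore a score close to 1.0, which is the case Howard describes. A URL-only comparison would miss this, because the addresses differ even though the content doesn’t.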

But the really tricky stuff comes when people submit the same story from a different site. This is a gray area because, of course, some sites have different takes on the same topic, and who’s to say which is more Digg-worthy than another? Digg now says it will scan descriptive information such as the story’s title to see whether something very similar is already in the system. But it’s still a gray area.
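Digg only says it will check “descriptive information such as the story’s title.” One simple way to do that, shown here as an assumption rather than Digg’s actual method, is to compare the normalized words of two titles with Jaccard similarity (shared words divided by total distinct words). The stopword list, the 0.6 threshold, and all names below are hypothetical.

```python
import re

# Hypothetical, deliberately tiny stopword list for illustration.
STOPWORDS = {"a", "an", "the", "of", "to", "in", "on", "for", "and", "is"}


def title_tokens(title: str) -> set[str]:
    """Lowercase a title, split it into words, and drop stopwords."""
    words = re.findall(r"[a-z0-9]+", title.lower())
    return {w for w in words if w not in STOPWORDS}


def title_similarity(a: str, b: str) -> float:
    """Jaccard similarity of two titles' word sets (0.0 to 1.0)."""
    ta, tb = title_tokens(a), title_tokens(b)
    if not ta and not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


def similar_titles(new_title: str, existing_titles: list[str],
                   threshold: float = 0.6) -> list[str]:
    """Return existing titles at or above the threshold, most similar first."""
    scored = [(title_similarity(new_title, t), t) for t in existing_titles]
    return [t for score, t in sorted(scored, reverse=True) if score >= threshold]
```

For example, `similar_titles("Apple Releases New iPhone", ["Apple releases the new iPhone today", "Google launches Android phone"])` returns only the first candidate: four of its five distinct non-stopword words overlap (a score of 0.8), while the second shares none. A check like this can only suggest a match, which is why different takes on the same topic remain a gray area.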

At least the submission process should be faster now. Digg will run these dupe checks after you enter the URL but before you enter the description, which saves a step in the process. It claims this dupe detection will take only “a few seconds.”

And if you ignore the dupe algorithms and submit dupe stories anyway, Digg is watching: “We’ll also be monitoring when certain Diggers choose to bypass high-confidence duplicates and will use this data to continue to improve the process going forward.”

[photo: flickr/yogi]