We’ve been a bit baffled by the system Apple has in place when it comes to ratings for applications in the App Store. Is it allowing apps with nudity? Not allowing them? Allowing them with a 17+ rating? We’ve talked to some developers willing to break their NDAs because they think the App Store approval process in general is messed up, and would like to see Apple do a better job handling it. So here’s how the ratings system currently works for the App Store.

The Ratings

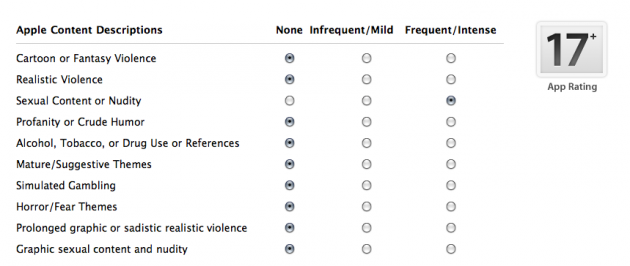

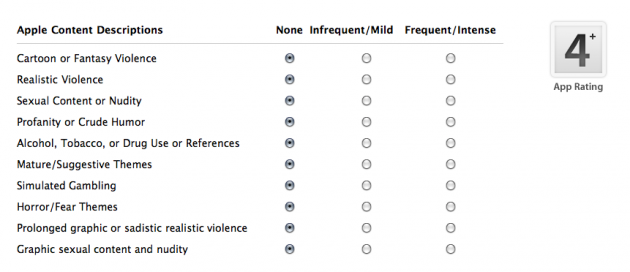

When you go to submit your app through iTunes Connect, one of the steps takes you to a ratings matrix that you must fill out. This contains 10 questions listed under “Apple Content Descriptions.” For each of the 10 questions you must say “None”, “Infrequent/Mild”, or “Frequent/Intense.” Depending on what answer you give for each of these, the rating of your app in the upper right corner will change. These ratings go from “4+” to “9+” to “12+” to “17+” to “No Rating.”

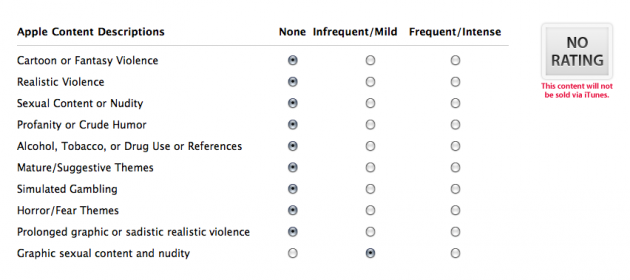

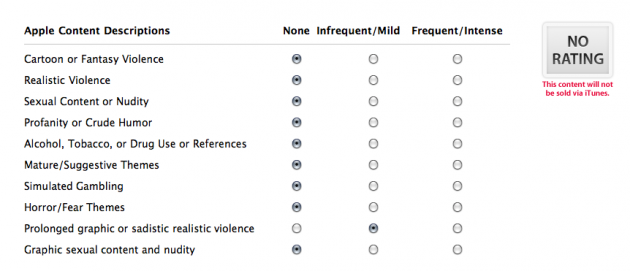

That last one is key. If your app gets the “No Rating” label, a warning written in red appears underneath it stating: “This content will not be sold via iTunes.” So what triggers such a rating? Well, not a lot. Basically, it comes down to the final two questions in the 10-question matrix. Let’s run through them in descending order:

- Cartoon or Fantasy Violence

- Realistic Violence

- Sexual Content or Nudity

- Profanity or Crude Humor

- Alcohol, Tobacco, or Drug Use or References

- Mature/Suggestive Themes

- Simulated Gambling

- Horror/Fear Themes

- Prolonged graphic or sadistic realistic violence

- Graphic sexual content and nudity

As I noted, those last two are the keys to getting your app banned. But there are a few interesting things about this. First of all, you may notice that these final two are not capitalized in the same way that the other questions are. That suggests to me that Apple added them at a different time than all the others and possibly even in a rush.

Second, you’ll notice that there’s a question about both “Sexual Content or Nudity” and “Graphic sexual content and nudity.” What’s interesting about this is that apps with “Sexual Content or Nudity” are still allowed, even if you select “Frequent/Intense” in that field. You’ll get a 17+ rating, but your app will still be allowed. However, if you select even “Infrequent/Mild” for “Graphic sexual content and nudity,” your app is banned. I’m not sure what the difference is between “intense sexual content and nudity” and “mild graphic sexual content and nudity,” and neither are a lot of developers.

The Gray Area

You might think that since apps can be classified as having frequent/intense sexual content or nudity, an app with topless girls would be okay. But apparently, it’s not. In Apple’s words:

Apple will not distribute applications that contain inappropriate content, such as pornography.

So then, what exactly is the frequent/intense sexual content or nudity that is allowed? One developer we spoke with believes Apple may intend that category for applications featuring things like sexual education. If so, that is hilarious. Why would only people over the age of 17 be allowed to look at such apps?

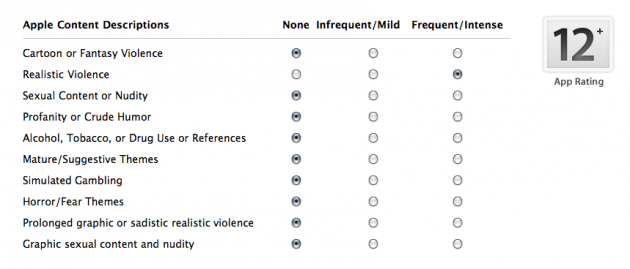

The questions that pertain to violence are just as bad, maybe even worse. I understand that there’s a difference between cartoon violence and realistic violence, but both of those are allowed. How “frequent/intense realistic violence” differs from “mild prolonged graphic violence” seems, again, like a pretty big gray area. Yet one is allowed, and one isn’t.

And not only is one allowed, “mild realistic violence” carries only a 9+ rating. “Intense realistic violence” carries only a 12+ rating. Apparently, the jump from “intense realistic violence” to “mild prolonged graphic violence” means skipping over the 17+ rating entirely, and going straight to banned. That makes no sense.
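To make that jump concrete, here is a rough sketch in Python of the matrix as far as we can reconstruct it. It is not Apple's actual logic. It encodes only the cells described in this post; the names `rate`, `KNOWN_CELLS`, and `BANNING_QUESTIONS` are made up for illustration, and two things are assumptions on my part: that the overall rating is simply the most restrictive one triggered by any single answer, and that answering “None” everywhere yields “4+”.

```python
from enum import Enum


class Level(Enum):
    NONE = "None"
    MILD = "Infrequent/Mild"
    INTENSE = "Frequent/Intense"


# Ratings ordered from least to most restrictive, as listed in the matrix.
RATINGS = ["4+", "9+", "12+", "17+", "No Rating"]

# The two questions where any answer other than "None" blocks the app.
BANNING_QUESTIONS = {
    "Prolonged graphic or sadistic realistic violence",
    "Graphic sexual content and nudity",
}

# Only the cells reported in this post; every other cell is unknown to us.
KNOWN_CELLS = {
    ("Realistic Violence", Level.MILD): "9+",
    ("Realistic Violence", Level.INTENSE): "12+",
    ("Sexual Content or Nudity", Level.INTENSE): "17+",
}


def rate(answers):
    """Return the rating for a dict mapping question -> Level.

    Assumption: the overall rating is the most restrictive rating
    triggered by any single answer, and all-"None" yields "4+".
    """
    worst = 0  # index into RATINGS; 0 is "4+"
    for question, level in answers.items():
        if level is Level.NONE:
            continue
        if question in BANNING_QUESTIONS:
            return "No Rating"
        rating = KNOWN_CELLS.get((question, level))
        if rating is None:
            # Refuse to guess at cells this post does not report.
            raise ValueError(f"unknown cell: {question!r} / {level.value}")
        worst = max(worst, RATINGS.index(rating))
    return RATINGS[worst]


print(rate({"Realistic Violence": Level.INTENSE}))
# 12+
print(rate({"Prolonged graphic or sadistic realistic violence": Level.MILD}))
# No Rating
```

Laid out this way, the gap is easy to see: nothing in the violence questions ever lands on 17+, so the only step up from 12+ is a ban.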

That’s the key point to all of this: The ratings range from making no sense to having way too much gray area. Apple is expecting developers to rate their apps correctly, but if it simply doesn’t allow anything in the last two categories to get through, of course those developers are going to wiggle their apps into the “safe” categories. And why shouldn’t they? A lot of those definitions appear to be the exact same.

And that’s probably why we’re seeing a lot of apps that aren’t supposed to get through slip through the system. Flat out: The system is broken.

When the Hottest Girls app got through, just look at the rating that was attached to it:

Rated 17+ for the following:

Frequent/Intense Sexual Content or Nudity

Frequent/Intense Mature/Suggestive Themes

That seems like a reasonable rating for an app with topless girls. But apparently, Apple wanted it rated under “Frequent/Intense Graphic sexual content and nudity” — meaning it wouldn’t have been allowed in the App Store. (Though, to be clear, according to Apple, the app in question was tricky and added content to the app after Apple approved it. But it was just more topless girls, and so the main point remains the same.)

And at the same time, Apple is letting in apps that say they have topless pictures right in the title of the app. If it’s aiming to ban all of them, it’s doing a pretty awful job.

The Hypocrisy

Perhaps my favorite thing about all of this, though, is the hypocrisy that is staring back at each one of us who has an iPhone or iPod touch. Load up iTunes on the device and you can buy any number of movies that have plenty of nudity, sex, sadistic violence, prolonged violence, and any combination of them. Yet if you want an app that has any of those, forget about it.

I can understand why Apple would want to restrict mature apps before it had parental controls in place for them, but now that it has those in place, there should be no reason why an adult shouldn’t be allowed to get an application with nudity in it if they want. Especially if that same type of content is available on the device through movies in the iTunes Store. (Not to mention through any number of websites using the Safari browser.)

I understand that the store is run by Apple and it has the right to accept or reject whatever content it wishes, but again, this is about an absurd gray area for developers and hypocrisy. The gray area, I believe, is making app screeners’ lives a living hell, and all of us have to suffer for it. I can’t tell you how many emails we get from developers complaining that their apps have been in Apple’s approval queue for weeks or months with no response. Some of these developers are hoping to make a living off of these apps, yet Apple is backlogged in the approval process because it has to check for things like a certain level of nudity in an app, rather than letting the rating system do its job.

Apple hasn’t responded to multiple attempts to contact it on this matter. Frankly, I don’t think it knows what it really wants to do in this regard. Judging by its own rating system, it wants to allow “Nudity” with a capital “N,” but not “nudity.” Or maybe it’s that the nudity can’t be “graphic.” But how are topless pictures graphic? And if those are graphic, what is non-graphic nudity? Maybe it means that it wants to allow for “tasteful” nudity, but again, that’s a big gray area. Is Apple — and by Apple I mean app screeners — now going to be arbiters of taste? As if they needed any more to do.

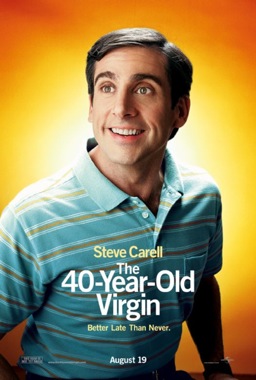

I think what Apple really wants to see is the image below. And that’s too bad.