Lately Twitter has been cleaning house, raising money, doing interviews and actually talking to users. In a blog post last week they did a Q&A session, directly answering questions about Twitter’s architecture.

So I have a few questions, too, based on discussions I’ve had with people who say they’ve seen Twitter’s architecture.

- Is it true that you only have a single master MySQL server running replication to two slaves, and the architecture doesn’t auto-switch to a hot backup when the master goes down?

- Do you really have a grand total of three physical database machines that are POWERING ALL OF TWITTER?

- Is it true that the only way you can keep Twitter alive is to have somebody sit there and watch it constantly, and then manually switch databases over and rebuild when one of the slaves fails?

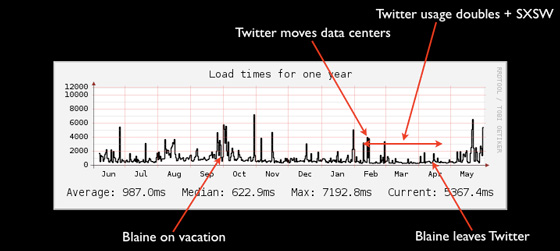

- Is that why most of your major outages can be traced to periods of time when former Chief Architect/server watcher Blaine Cook wasn’t there to sit and monitor the system?

- Given the record-beating outages Twitter saw in May after Cook was dismissed, is anyone there capable of keeping Twitter live?

- How long will it be until you are able to undo the damage Cook has caused to Twitter and the community?

Update: Twitter continues to be annoyingly and constructively responsive to criticism. They responded to this post, saying “We’re working on a better architecture.” Kind of takes the air out of the balloon when you can’t get them riled up.